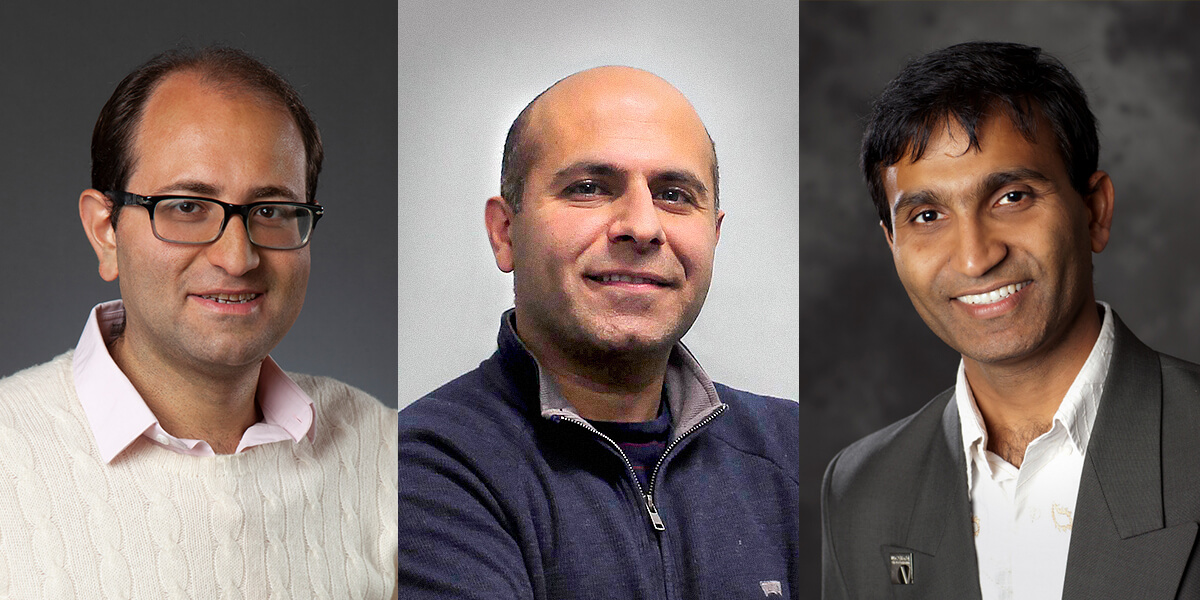

P.I. Salman Avestimehr (center), Andrew and Erna Viterbi Early Career Chair Mahdi Soltanolkotabi (left), and Murali Annavaram (right) seek to improve innovation in A.I. (PHOTO CREDIT: USC Viterbi).

Design, test, analyze, repeat. Whether it’s Archimedes working on a compound pulley, or Andrew Viterbi developing the algorithm that opened the door to modern communications, this is how engineers have always innovated. But that seemingly simple process has posed a challenge to two of the most important engineering tools today: machine learning and artificial intelligence. Now, three Ming Hsieh Department of Electrical and Computer Engineering professors have won a 4-year, $2 million DARPA grant to address the problem. Salman Avestimehr, principal investigator, along with Professors Murali Annavaram and Mahdi Soltanolkotabi, will be working on new algorithmic solutions to better test and train machine learning models for artificial intelligence.

First, a little background on machine learning in general. In a nutshell, machine learning is a set of algorithms designed to take in large amounts of data and recognize patterns. What sets them apart is not just how fast they can analyze data, but the fact that they can actually learn and improve upon their results as they receive more information. Deep neural networks (DNNs), a family of machine learning models loosely modeled after the human brain, have already become integral parts of most modern industries, from banking and finance to defense, security, health and pretty much anything else you can imagine.

“Innovation can only happen as fast as you can test and analyze your design. This process is the fuel for A.I. data science.”

– Professor Salman Avestimehr

The challenge in designing and testing machine learning models, such as DNNs, is that they are gigantic in size – they consist of millions of parameters that need to be trained. Furthermore, they need to be trained over enormous datasets to achieve good performance. With today’s technology, training large-scale models can take multiple days or weeks! A promising solution for handling this problem is to leverage distributed training. In fact, companies like Google have been able to harness significantly larger datasets for problems like web search in sub-second timescales. Unfortunately, for machine learning tasks, the performance benefits of using multiple computer nodes currently do not scale well with the number of machines, and diminishing returns are often observed as more machines are added.
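
To make the scaling problem concrete, here is a minimal toy sketch in Python. It is not the DIAMOND team's model: the function names, the one-second compute cost, and the assumption that communication cost grows linearly with the number of nodes are all hypothetical, chosen only to illustrate why adding machines can stop paying off.

```python
def step_time(num_nodes, compute_s=1.0, comm_s_per_node=0.02):
    """Toy time for one training step on `num_nodes` machines.

    Assumptions (illustrative only): the compute work splits evenly
    across nodes, while communication cost grows linearly with the
    number of other nodes that must exchange updates.
    """
    compute = compute_s / num_nodes
    communication = comm_s_per_node * (num_nodes - 1)
    return compute + communication


def speedup(num_nodes, **kwargs):
    """Speedup relative to training on a single node."""
    return step_time(1, **kwargs) / step_time(num_nodes, **kwargs)


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16, 32):
        print(f"{n:>2} nodes: speedup {speedup(n):.2f}x")
```

Under these made-up numbers, the speedup falls well short of the node count and eventually declines as communication starts to dominate, which is the kind of diminishing benefit described above.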

The overarching goal of this project, named DIAMOND (Distributed Training of Massive Models at Bandwidth Frontiers), is to leverage algorithmic, hardware, and system implementation innovations to break this barrier in distributed deep model training and enable a speedup of two orders of magnitude. Speedups of this size are only possible because the team will be exploring radically new hardware, models, and approaches to DNN training.

The importance of improving the speed at which DNNs can be trained and tested cannot be overstated. Consider this hypothetical: A company has developed a technology which can be implemented at airports. It can scan people’s faces as they arrive and identify those who may be infected with coronavirus. For a technology like this to work, a DNN is needed. And this brings us back to that design, test, analyze, repeat pattern. With current systems, it may take engineers weeks or months to complete the process of designing, testing and redesigning the DNN. By the time the network had been trained to handle that data and think on its own, it would be too late. In fact, motivated by the above example, the USC team also plans to develop an application scenario, named ADROIT (Anomaly Detector using Relationships over Image Traces), to demonstrate the societal impact of their innovations.

Engineering has always been, in part, about innovation. Something is made, tested, and improved upon. Without innovation, there can be no true progress – for engineers or the society in which they operate. This is not lost on Avestimehr. “Innovation can only happen as fast as you can test and analyze your design – this process is the fuel for A.I. data science,” he says. Yes, the work of these researchers could speed up the rate at which we train and test DNNs, bring down the cost of training significantly, and ultimately bring technology to society faster. But there is something more important at stake as well: Ensuring the continued ability to innovate new machine learning models and the Artificial Intelligence that relies on them.

Published on April 1st, 2020

Last updated on April 1st, 2020