A research team at USC’s Viterbi School of Engineering has created a tool to help the film industry track the depiction of violence in the language of scripts.

For many in the film industry, having a film rated NC-17 by the Motion Picture Association of America (MPAA) is the kiss of death. Because an NC-17 film is closed to viewers aged 17 and under, filmmakers face a choice between limited box-office takings and expensive re-editing, or even reshooting, to meet the requirements of a more palatable R rating.

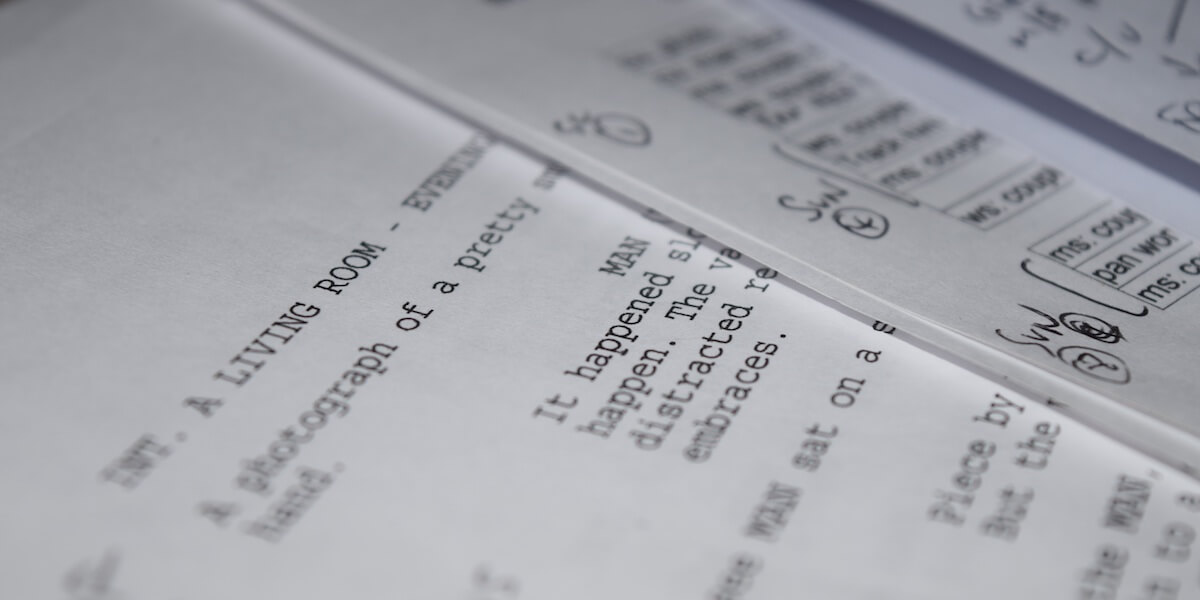

But what if there were a more accurate way to determine a film’s likely classification at the script stage, before it moves through the expensive process of production and post-production? A research team from the Signal Analysis and Interpretation Lab (SAIL) at USC’s Viterbi School of Engineering is using machine learning to analyze the depiction of violence in the language of scripts. The result is a new tool to assist producers, screenwriters and studios in determining the potential classification of their projects.

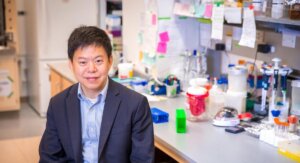

Presented at the 2019 Association for the Advancement of Artificial Intelligence (AAAI) Conference, the new AI tool was developed by PhD students Victor Martinez, Krishna Somandepalli, Karan Singla, Anil Ramakrishna, and their advisor Shrikanth Narayanan, the Niki and Max Nikias Chair in Engineering, in collaboration with Yalda Uhls of Common Sense Media. The study marks the first time that natural language processing has been used to identify violent language and content in movie scripts.

The research team built the script tool using machine learning language analysis of popular film scripts.

The AI tool was developed using a dataset of 732 popular film scripts that Common Sense Media had annotated for violent content. From this data, the team built a neural network, a machine learning model that learns from input data (here, the text of the scripts) to produce an output (violence ratings for each movie). The AI tool analyzed the language in the screenplays' dialogue and found that its semantics and sentiment were strong indicators of the violent content rated in the completed films.
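To make the idea of "learning from script text to produce a violence rating" concrete, here is a minimal Python sketch of the general approach. It is not the USC team's model: it swaps their neural network for plain bag-of-words logistic regression, and the training lines, labels, and the names `train`, `score_line`, and `score_script` are all hypothetical, invented for illustration. Averaging line-level probabilities into a script-level score is likewise an assumption, not a detail taken from the study.

```python
import math
import re
from collections import Counter

# Hypothetical toy training data: (dialogue line, 1 = violent, 0 = not violent).
# These lines are invented for illustration; they are not from the study's dataset.
TRAIN = [
    ("I will kill you", 1),
    ("He pulled the gun and fired", 1),
    ("They beat him until he stopped moving", 1),
    ("Let's get coffee tomorrow", 0),
    ("I love this song", 0),
    ("The meeting starts at noon", 0),
]


def featurize(text: str) -> Counter:
    """Bag-of-words features: lowercase alphabetic tokens and their counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def score_line(text: str, weights: dict, bias: float) -> float:
    """Probability that a single dialogue line is violent under the toy model."""
    feats = featurize(text)
    z = bias + sum(weights.get(w, 0.0) * c for w, c in feats.items())
    return sigmoid(z)


def train(examples, epochs: int = 200, lr: float = 0.5):
    """Fit logistic regression by stochastic gradient ascent on the log-likelihood."""
    weights: dict = {}
    bias = 0.0
    for _ in range(epochs):
        for text, label in examples:
            err = label - score_line(text, weights, bias)  # d(log-likelihood)/dz
            bias += lr * err
            for w, c in featurize(text).items():
                weights[w] = weights.get(w, 0.0) + lr * err * c
    return weights, bias


def score_script(lines, weights: dict, bias: float) -> float:
    """Script-level score: mean of line-level probabilities (a hypothetical aggregation)."""
    return sum(score_line(line, weights, bias) for line in lines) / len(lines)


weights, bias = train(TRAIN)
violent_script = ["I will kill you", "He fired the gun"]
calm_script = ["Let's get coffee at noon", "I love this song"]
print(round(score_script(violent_script, weights, bias), 3))
print(round(score_script(calm_script, weights, bias), 3))
```

A word-count model like this only registers which words appear, so it would likely miss a line that carries no explicit violent vocabulary. Capturing context, semantics and sentiment, as the researchers describe, is what a richer model has to add beyond this sketch.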

Narayanan and his team have been using AI to analyze human-centric data for over 15 years as part of their computational media intelligence research, which focuses on analysis of data related to film and mass media. They regularly work with partners such as the Geena Davis Institute on Gender in Media to analyze film and media data and determine what it can reveal about representation.

Narayanan said that text analysis has a long history of use in evaluating content for hate speech and sexist and abusive language, but that analyzing violence in film through the language of the script is a more complex task.

“Typically when people were studying violent scenes in media, they look for gun shots, screeching cars or crashes, someone fighting and so on. But language is more subtle. These kinds of algorithms can look at and keep track of context, not only what specific words and word choices mean. We look at it from an overarching point of view,” Narayanan said.

Martinez cited a portion of dialogue from The Bourne Ultimatum (2007) as an example of implicit violence that the AI tool can detect but current technology cannot:

“I knew it was going to end this way. It was always going to end this way…”

Martinez said that the AI tool flagged this line as violent, even though it contains no explicit linguistic markers of violence.

“In contrast to the way the MPAA rates, our models look at the actual content of the movie, and the context in which dialogue is said, to make a prediction on how violent that movie is,” Martinez said.

Somandepalli said that the research team is now using the tool to analyze how screenplays use violence in depictions of victims and perpetrators, and the demographics of those characters.

Such findings could play an important role in post-#MeToo Hollywood, where there are concerns about the representation of women and the perpetuation of negative stereotypes, as well as a renewed focus on strong female characters with agency.

The team anticipates that the tool could eventually be integrated into screenwriting software. Most screenwriting programs, such as Final Draft and WriterDuet, can already create reports showing the proportion of character dialogue by gender. This tool would add analysis of the violent language each character uses, showing which characters are the perpetrators and which are the victims.

“Often there may be unconscious patterns and biases present in the script that the writer may not intend, and a tool like this will help raise awareness of that,” Narayanan said.

The study “Violence Rating Prediction from Movie Scripts” is published in the Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence.

Published on March 20th, 2019

Last updated on March 20th, 2019